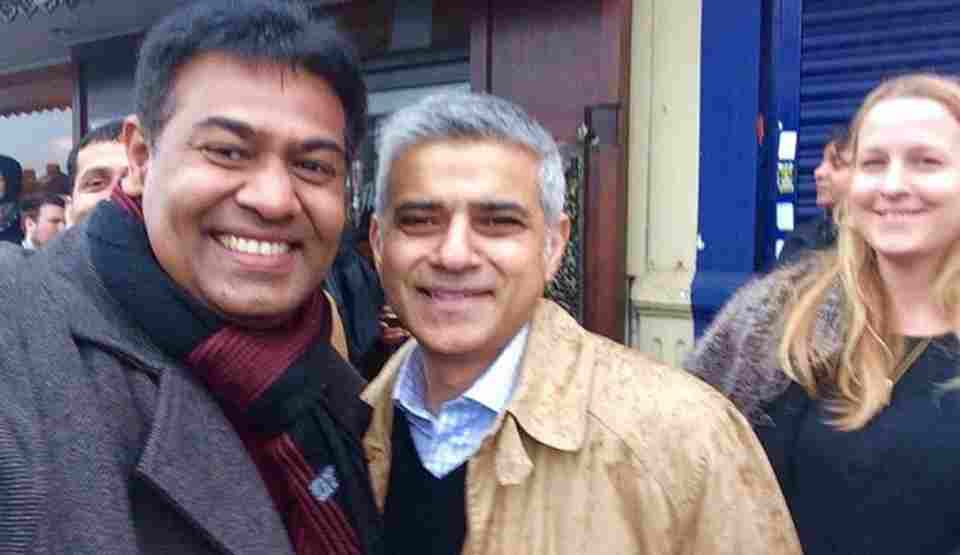

An immigration barrister faces potential disciplinary proceedings after being accused of using artificial intelligence tools like ChatGPT to draft legal submissions containing fictitious case citations, a tribunal has ruled.

Chowdhury Rahman appeared before the Upper Tribunal representing two Honduran sisters seeking asylum, but raised serious concerns when he cited cases that Judge Mark Blundell discovered were either “entirely fictitious” or “wholly irrelevant” to the legal arguments being made.

The Asylum Case

The case involved two sisters, aged 29 and 35, who fled Honduras and arrived at Heathrow Airport in June 2022. They claimed asylum based on threats from the notorious MS-13 gang, also known as Mara Salvatrucha. According to their testimony during screening interviews, the gang wanted them to become “their women” and had threatened to kill both the sisters and their families if they refused.

The Home Office rejected their asylum application in November 2023, stating it did not believe the women had genuinely been targeted by the gang. A First-tier Tribunal judge later dismissed their appeal, finding insufficient evidence that they had been subject to adverse attention from gangs while in Honduras.

The Appeal and Discovery

When Rahman brought the case to the Upper Tribunal, he argued the previous judge had failed to properly assess credibility, made errors in evaluating documentary evidence, and failed to consider risks under Article 3 of the European Convention on Human Rights, which prohibits torture and inhuman or degrading treatment. He also raised concerns about procedural fairness and internal relocation options.

However, Judge Blundell rejected all these arguments, finding no errors of law in the original judgment. More troubling, he discovered significant problems with Rahman’s written submissions.

Of the twelve legal authorities cited in the paperwork, Judge Blundell found that “some of those authorities did not exist and that others did not support the propositions of law for which they were cited.” He listed ten examples in his judgment, noting that Rahman “appeared to know nothing about any of the authorities he had cited” and had not intended to actually reference these decisions during his oral submissions.

Attempted Explanations

When confronted, Rahman claimed he had used “various websites” to conduct his research and attributed the inaccuracies to his “drafting style.” He suggested there might have been some “confusion and vagueness” in his submissions and said he might need to “construct sentences in a more liberal way” in future.

Judge Blundell firmly rejected these explanations, stating: “The problems which I have detailed above are not matters of drafting style. The authorities which were cited in the grounds either did not exist or did not support the grounds which were advanced.”

Judge’s Findings

In unusually strong language, Judge Blundell concluded it was “overwhelmingly likely” that Rahman had used generative AI to formulate his grounds of appeal and then “attempted to hide that fact” during the hearing.

“He has been called to the Bar of England and Wales, and it is simply not possible that he misunderstood all of the authorities cited in the grounds of appeal to the extent that I have set out above,” the judge wrote. “Even if Mr Rahman thought, for whatever reason, that these cases did somehow support the arguments he wished to make, he cannot explain the entirely fictitious citations.”

The judge expressed particular concern that Rahman “did not appear to understand the gravity of the situation” and failed to grasp how his misleading submissions had wasted significant judicial time.

Potential Consequences

Judge Blundell stated he is “minded” to refer Rahman’s conduct to the Bar Standards Board, which regulates barristers in England and Wales and whose disciplinary process can lead to sanctions ranging from a reprimand to disbarment.

The case highlights growing concerns about the use of AI in legal practice, particularly when practitioners fail to verify the accuracy of AI-generated content. Judge Blundell noted that one of the cases cited in Rahman’s submissions “has recently been wrongly deployed by ChatGPT in support of similar arguments,” suggesting this may be part of a broader pattern of AI tools generating plausible-sounding but fictitious legal citations.

The asylum appeal for the two Honduran sisters was ultimately dismissed.